Journey into the Future with OpenShift Data Science

Introduction

Red Hat OpenShift Data Science (RHODS) is a comprehensive platform designed to streamline and enhance the process of building, training, and deploying machine learning models. It integrates seamlessly with OpenShift, providing a scalable and secure environment for data science workloads.

Unified Environment: RHODS provides a unified environment for data scientists and developers, allowing them to collaborate and work seamlessly across various tools and frameworks.

Model Building: Data scientists can leverage popular machine learning libraries and tools to build, train, and validate models within the platform. This includes support for frameworks like TensorFlow, PyTorch, and more.

Scalability: RHODS can scale horizontally and vertically to accommodate varying workloads, ensuring optimal performance even with large datasets and complex models.

Integration with Data Sources: It provides connectors and integrations to various data sources, enabling seamless data ingestion and preprocessing for machine learning tasks.

Security and Compliance: RHODS integrates OpenShift’s robust security features, ensuring that data and models are protected, and compliance requirements are met.

So, let’s start… :)

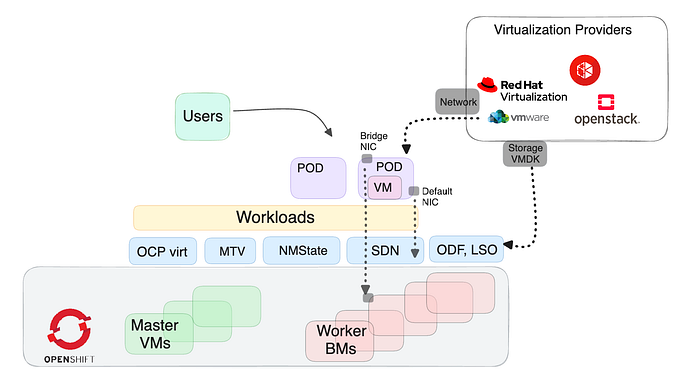

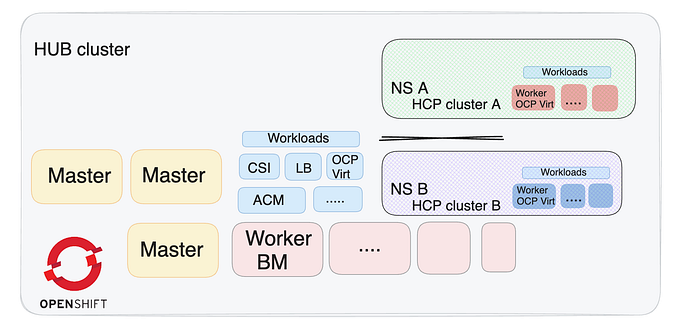

Architecture Overview

OpenShift Data Science Operator

Go to OperatorHub and install the OpenShift Data Science Operator.

By default, RHODS grants the admin role to a group named ‘rhods-admins’. You can configure another admin group or user, or, as I chose in this example, add your OCP admin user to this group.
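If you prefer the CLI to the console, group membership can be managed with `oc adm groups`. Here is a minimal sketch, assuming the default ‘rhods-admins’ group and that you are logged in with cluster-admin rights. The `add_rhods_admin` helper, the `OC` override, and the user name are hypothetical names used only for illustration.

```shell
# Minimal sketch: add a user to the RHODS admin group from the CLI.
# OC is a hypothetical override so the command can be previewed with OC=echo.
OC="${OC:-oc}"

add_rhods_admin() {
  user="$1"
  # If the group does not exist yet on your cluster, create it first:
  #   oc adm groups new rhods-admins
  "$OC" adm groups add-users rhods-admins "$user"
}

# Usage (hypothetical user name):
#   add_rhods_admin my-ocp-admin
```

Setting `OC=echo` before calling the function prints the command instead of running it, which is handy for checking it before touching the cluster.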

Delete the RHODS pods and wait until the new pods reach the ‘Running’ status:

oc delete pod --all -n redhat-ods-operator

Log in to the RHODS UI with your OpenShift user.

Here’s what’s on the operator menu:

Applications → Enabled

The Enabled page displays applications that are enabled and ready to use on Open Data Hub.

Applications → Explore

Click on a card for more information about the application or to access the Enable button.

Data science projects

The Data Science Projects page allows you to organize your data science work into a single project.

Data Science Pipelines → Pipelines

The Pipelines page allows you to import, manage, track, and view data science pipelines. You can standardize and automate machine learning workflows to enable you to develop and deploy your data science models.

Data Science Pipelines → Runs

The Runs page allows you to define, manage, and track executions of a data science pipeline.

Model Serving

The Model Serving page allows you to manage and view the status of your deployed models.

Resources

The Resources page displays learning resources such as documentation, how-to material, and quick start tours.

Settings → Notebook images

The Notebook image settings page allows you to configure custom notebook images that cater to your project’s specific requirements.

Settings → Cluster settings

The Cluster settings page allows you to perform cluster-wide administrative tasks.

Settings → User management

The User and Group settings page is where the groups and their privileges can be checked or configured.

Let’s add one more operator, which gives us the option to build data pipelines fully integrated with RHODS.

Now we are ready to use RHODS.

First, create a RHODS project. Thanks to the integration, creating a RHODS project also creates a matching project at the OpenShift cluster level.

In our project, we will create a notebook from one of the default base images. Click the ‘Create workbench’ button.

In the wizard, you can choose the image, version, size, GPU if necessary, etc.

NOTE: By default, RHODS offers some notebook image options

Next, we have the option to add a data connection (an S3 bucket). If you need one, as I did, pause the creation of the notebook for a moment, go to your S3 provider (in my case OpenShift Data Foundation, ODF), create a bucket, and then return to complete the process.
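On ODF, one common way to get a bucket is an ObjectBucketClaim. The following is a minimal sketch rather than the exact steps used in this article. It assumes the Multicloud Object Gateway storage class is named `openshift-storage.noobaa.io` (check `oc get storageclass` on your cluster). The `obc_manifest` helper, the `OBC_STORAGE_CLASS` override, and the claim name are hypothetical.

```shell
# Print an ObjectBucketClaim manifest for the given claim name.
# OBC_STORAGE_CLASS is a hypothetical override for clusters whose
# object storage class has a different name.
obc_manifest() {
  cat <<EOF
apiVersion: objectbucket.io/v1alpha1
kind: ObjectBucketClaim
metadata:
  name: $1
spec:
  generateBucketName: $1
  storageClassName: ${OBC_STORAGE_CLASS:-openshift-storage.noobaa.io}
EOF
}

# Usage (hypothetical claim name, applied to the project used in this article):
#   obc_manifest workbench-bucket | oc apply -n aelfassy -f -
```

Once the claim is bound, a ConfigMap and a Secret with the same name as the claim should appear in the project. They hold the bucket name, the endpoint, and the access keys that the data connection form asks for.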

(Optional) Go back to RHODS and create a data connection.

Click on the ‘Create workbench’ button

Our workbench was created but it isn't ready yet

Go to the workbench project in the OpenShift UI and wait until the pod status is ‘Running’.
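The wait can also be done from the CLI instead of refreshing the console. This is a minimal sketch using `oc wait`. The `wait_for_pods` helper and the `OC` override are hypothetical names, and the default timeout is an arbitrary choice.

```shell
# OC is a hypothetical override so the command can be previewed with OC=echo.
OC="${OC:-oc}"

# Block until every pod in the namespace reports Ready, or until the timeout.
wait_for_pods() {
  namespace="$1"
  timeout="${2:-300s}"
  "$OC" wait --for=condition=Ready pod --all -n "$namespace" --timeout="$timeout"
}

# Usage:
#   wait_for_pods aelfassy                  # the workbench project
#   wait_for_pods redhat-ods-operator 600s  # after restarting the operator pods
```

Two caveats: `oc wait` fails immediately if no pods exist yet in the namespace, and completed pods never become Ready, so in a namespace that also runs jobs a label selector (`-l`) is a safer choice than `--all`.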

Once the pod is running, go to the RHODS UI → Data science projects → aelfassy and click the ‘Open’ button.

Our notebook is created and ready, let’s play

For example, here’s some sample Matplotlib code from jupyterbook.org.

RHODS comes with default images, right? But what if you need a special image?

Custom Notebooks

You may need a custom image when you need a specific version of a library that the base notebook images do not provide, when you need many Python packages and are tired of running pip install every time, or when you can’t use root privileges in the pods.

To start from a notebook image that already includes all the packages you need, we have two options:

- Use the wizard to create a custom image

- Create a custom Dockerfile with your packages

Once the image is created, import it into RHODS.

So let’s start with the first option

Clone the wizard Git repo:

git clone https://github.com/opendatahub-io-contrib/workbench-images.git

Launch the wizard with ./interactive-image-builder.sh and answer the questions. Based on your choices, the wizard will create the recipe and give you instructions on how to build the image. For example:

cd custom-images/cuda-jupyter-pytorch-c9s-py311_2023c_20230930

We are ready to create our image. (Before the build, there were no images on the machine.)

NOTE: This process can take several minutes (depending on the size of the image)

podman build -t workbench-images:cuda-jupyter-pytorch-c9s-py311_2023c_20230930 .

Once the process is complete and our image is ready, we will tag it according to our registry so that we can pull it later

podman tag 3da546102969 quay.io/aelfassy/images/workbench-images

Push the image to your registry, in my case Quay
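The push itself is a login followed by `podman push`. Here is a minimal sketch, assuming the image was tagged as above and that your account can write to the repository. The `push_image` helper and the `PODMAN` override are hypothetical names.

```shell
# PODMAN is a hypothetical override so the commands can be previewed with PODMAN=echo.
PODMAN="${PODMAN:-podman}"

# Log in to the registry that hosts the image, then push the tagged image.
push_image() {
  image="$1"               # e.g. quay.io/aelfassy/images/workbench-images
  registry="${image%%/*}"  # registry host is the part before the first slash
  "$PODMAN" login "$registry" && "$PODMAN" push "$image"
}

# Usage:
#   push_image quay.io/aelfassy/images/workbench-images
```

If the repository is private, RHODS will also need a pull secret for it. That setup is outside the scope of this sketch.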

Now, import the custom image into RHODS. Go to Settings → Notebook images and click the ‘Import image’ button.

NOTE: You can add information about the versions and packages included in the image to identify it easily later (1.3 in my example).

The image was imported and you can use it as a base

Let’s create a notebook with the custom image

Go to the workbench project in the OpenShift UI. Once the pod is running, go to the RHODS UI → Data science projects → aelfassy and click the ‘Open’ button.

Our notebook is created and ready

The second option — Create a custom Dockerfile and build the image from it

For example, here is a CUDA image Dockerfile:

# Start from CentOS Stream 9 + Python 3.9, ref: https://github.com/sclorg/s2i-python-container

FROM quay.io/sclorg/python-39-c9s:latest

# Switch to root to be able to install OS packages

USER 0

##################################

# CUDA Layer: CUDA+CuDNN+Toolkit #

##################################

# 1. CUDA Base

# ------------

ENV NVARCH x86_64

ENV NVIDIA_REQUIRE_CUDA "cuda>=11.8 brand=tesla,driver>=450,driver<451 brand=tesla,driver>=470,driver<471 brand=unknown,driver>=470,driver<471 brand=nvidia,driver>=470,driver<471 brand=nvidiartx,driver>=470,driver<471 brand=geforce,driver>=470,driver<471 brand=geforcertx,driver>=470,driver<471 brand=quadro,driver>=470,driver<471 brand=quadrortx,driver>=470,driver<471 brand=titan,driver>=470,driver<471 brand=titanrtx,driver>=470,driver<471 brand=unknown,driver>=510,driver<511 brand=nvidia,driver>=510,driver<511 brand=nvidiartx,driver>=510,driver<511 brand=geforce,driver>=510,driver<511 brand=geforcertx,driver>=510,driver<511 brand=quadro,driver>=510,driver<511 brand=quadrortx,driver>=510,driver<511 brand=titan,driver>=510,driver<511 brand=titanrtx,driver>=510,driver<511 brand=unknown,driver>=515,driver<516 brand=nvidia,driver>=515,driver<516 brand=nvidiartx,driver>=515,driver<516 brand=geforce,driver>=515,driver<516 brand=geforcertx,driver>=515,driver<516 brand=quadro,driver>=515,driver<516 brand=quadrortx,driver>=515,driver<516 brand=titan,driver>=515,driver<516 brand=titanrtx,driver>=515,driver<516"

ENV NV_CUDA_CUDART_VERSION 11.8.89-1

COPY cuda.repo-x86_64 /etc/yum.repos.d/cuda.repo

RUN NVIDIA_GPGKEY_SUM=d0664fbbdb8c32356d45de36c5984617217b2d0bef41b93ccecd326ba3b80c87 && \
    curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/rhel8/${NVARCH}/D42D0685.pub | sed '/^Version/d' > /etc/pki/rpm-gpg/RPM-GPG-KEY-NVIDIA && \
    echo "$NVIDIA_GPGKEY_SUM  /etc/pki/rpm-gpg/RPM-GPG-KEY-NVIDIA" | sha256sum -c --strict -

ENV CUDA_VERSION 11.8.0

# For libraries in the cuda-compat-* package: https://docs.nvidia.com/cuda/eula/index.html#attachment-a

RUN yum upgrade -y && yum install -y \
    cuda-cudart-11-8-${NV_CUDA_CUDART_VERSION} \
    cuda-compat-11-8 \
    && ln -s cuda-11.8 /usr/local/cuda \
    && yum clean all --enablerepo='*' \
    && rm -rf /var/cache/yum/*

# nvidia-docker 1.0

RUN echo "/usr/local/nvidia/lib" >> /etc/ld.so.conf.d/nvidia.conf && \
    echo "/usr/local/nvidia/lib64" >> /etc/ld.so.conf.d/nvidia.conf

ENV PATH /usr/local/nvidia/bin:/usr/local/cuda/bin:${PATH}

ENV LD_LIBRARY_PATH /usr/local/nvidia/lib:/usr/local/nvidia/lib64

COPY NGC-DL-CONTAINER-LICENSE /

# nvidia-container-runtime

ENV NVIDIA_VISIBLE_DEVICES all

ENV NVIDIA_DRIVER_CAPABILITIES compute,utility

# 2. CUDA Runtime

# ---------------

ENV NV_CUDA_LIB_VERSION 11.8.0-1

ENV NV_NVTX_VERSION 11.8.86-1

ENV NV_LIBNPP_VERSION 11.8.0.86-1

ENV NV_LIBNPP_PACKAGE libnpp-11-8-${NV_LIBNPP_VERSION}

ENV NV_LIBCUBLAS_VERSION 11.11.3.6-1

ENV NCCL_VERSION 2.15.5

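# The two variables below were referenced but never defined; values follow the NCCL version above

ENV NV_LIBNCCL_PACKAGE_NAME libnccl

ENV NV_LIBNCCL_PACKAGE_VERSION 2.15.5-1

ENV NV_LIBNCCL_PACKAGE ${NV_LIBNCCL_PACKAGE_NAME}-${NV_LIBNCCL_PACKAGE_VERSION}+cuda11.8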

RUN yum install -y \
    cuda-libraries-11-8-${NV_CUDA_LIB_VERSION} \
    cuda-nvtx-11-8-${NV_NVTX_VERSION} \
    ${NV_LIBNPP_PACKAGE} \
    libcublas-11-8-${NV_LIBCUBLAS_VERSION} \
    ${NV_LIBNCCL_PACKAGE} \
    && yum clean all --enablerepo='*' \
    && rm -rf /var/cache/yum/*

# 3. CuDNN

# --------

ENV NV_CUDNN_VERSION 8.6.0.163-1

ENV NV_CUDNN_PACKAGE libcudnn8-${NV_CUDNN_VERSION}.cuda11.8

LABEL com.nvidia.cudnn.version="${NV_CUDNN_VERSION}"

RUN yum install -y \
    ${NV_CUDNN_PACKAGE} \
    && yum clean all --enablerepo='*' \
    && rm -rf /var/cache/yum/*

# 4. CUDA Devel (Optional)

# ------------------------

#ENV NV_CUDA_LIB_VERSION 11.8.0-1

#ENV NV_NVPROF_VERSION 11.8.87-1

#ENV NV_NVPROF_DEV_PACKAGE cuda-nvprof-11-8-${NV_NVPROF_VERSION}

#ENV NV_CUDA_CUDART_DEV_VERSION 11.8.89-1

#ENV NV_LIBNPP_DEV_VERSION 11.8.0.86-1

#ENV NV_LIBNPP_DEV_PACKAGE libnpp-devel-11-8-${NV_LIBNPP_DEV_VERSION}

#ENV NV_LIBNCCL_DEV_PACKAGE_NAME libnccl-devel

#ENV NV_LIBNCCL_DEV_PACKAGE_VERSION 2.15.5-1

#ENV NCCL_VERSION 2.15.5

#ENV NV_LIBNCCL_DEV_PACKAGE ${NV_LIBNCCL_DEV_PACKAGE_NAME}-${NV_LIBNCCL_DEV_PACKAGE_VERSION}+cuda11.8

#RUN yum install -y \

# make \

# cuda-command-line-tools-11-8-${NV_CUDA_LIB_VERSION} \

# cuda-libraries-devel-11-8-${NV_CUDA_LIB_VERSION} \

# cuda-minimal-build-11-8-${NV_CUDA_LIB_VERSION} \

# cuda-cudart-devel-11-8-${NV_CUDA_CUDART_DEV_VERSION} \

# ${NV_NVPROF_DEV_PACKAGE} \

# cuda-nvml-devel-11-8-${NV_NVML_DEV_VERSION} \

# libcublas-devel-11-8-${NV_LIBCUBLAS_DEV_VERSION} \

# ${NV_LIBNPP_DEV_PACKAGE} \

# ${NV_LIBNCCL_DEV_PACKAGE} \

# && yum clean all --enablerepo='*' \

# && rm -rf /var/cache/yum/*

#ENV LIBRARY_PATH /usr/local/cuda/lib64/stubs

# 5. CuDNN Devel (Optional)

# ------------------------

#ENV NV_CUDNN_VERSION 8.6.0.163-1

#ENV NV_CUDNN_PACKAGE libcudnn8-${NV_CUDNN_VERSION}.cuda11.8

#ENV NV_CUDNN_PACKAGE_DEV libcudnn8-devel-${NV_CUDNN_VERSION}.cuda11.8

#LABEL com.nvidia.cudnn.version="${NV_CUDNN_VERSION}"

#RUN yum install -y \

# ${NV_CUDNN_PACKAGE} \

# ${NV_CUDNN_PACKAGE_DEV} \

# && yum clean all --enablerepo='*' \

# && rm -rf /var/cache/yum/*

# 6. CUDA Toolkit

# ---------------

# Install the CUDA toolkit. The CUDA repos were already set

RUN yum -y install cuda-toolkit-11-8 && \
    yum -y clean all --enablerepo='*' \
    && rm -rf /var/cache/yum/*

# Set this flag so that libraries can find the location of CUDA

ENV XLA_FLAGS=--xla_gpu_cuda_data_dir=/usr/local/cuda

##################################

# OS Packages and libraries #

##################################

# 1. Standard packages

# --------------------

# Copy packages list

....

WORKDIR /opt/app-root/src

ENTRYPOINT ["start-notebook.sh"]

Conclusion

This article walked step by step through creating a workspace for researchers, using the existing notebook images, S3 storage, GPUs, and more.

It also covered the creation of a specialized custom notebook image. Such an image, built to address specific needs, shows how RHODS adapts to diverse and demanding research scenarios.

I hope you found this article informative and that you are excited to try RHODS :)